Agents - Who's to Blame?

2026 will see the industry roll out large-scale cloud agent orchestration systems at large companies. One of the biggest questions will be - how does identity (authn and authz) work? When something goes wrong, whose responsibility is it to own that failure?

We see the issue of identity and ownership with LLMs in the consumer space - if someone prompts a model to generate something bad, evil, illegal, whatever - it’s viewed as a failure of the model’s identity, not the user’s. It’s quite strange - if the user wrote the words “I am evil and will do bad things!” it’s clearly the user’s fault, but if the user tells the model to say that, it’s viewed as a failure of the model.

There is an unfolding series of questions, each depending on the answer to the last.

The first one is most important - what identity does the agent have?

Agent Identity

The current status quo is that most developers run Claude Code on their laptop, and the agent inherits their file system and permissions. This is part of why the “CLI” style agents have taken off - they skip a ton of bureaucratic issues. No IAM, no service accounts, no getting them access to systems. Whatever the human has, they have.

This is obviously a poor status quo - agents laundering their opinion via the human creates issues of authenticity - how does the human mark their direct work versus the model’s? Maybe you say it doesn’t matter. But beyond attribution, there’s a greater problem with permissions - you don’t always want to give agents the full permissions that the human has. Agent harnesses recognize that and implement action allowlists and restrictions.
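As a rough illustration of what an action allowlist does, here is a minimal sketch. The `ALLOWED` patterns, the `tool:argument` string format, and the function names are all assumptions made for this example, not how any particular harness implements it.

```python
from fnmatch import fnmatchcase

# Hypothetical allowlist: glob patterns over "tool:argument" strings.
ALLOWED = ["read:*", "shell:git status", "shell:git diff*"]


def is_allowed(action: str, allowlist: list[str] = ALLOWED) -> bool:
    """Return True if the action matches at least one allowlist pattern."""
    return any(fnmatchcase(action, pattern) for pattern in allowlist)


def run_action(action: str) -> str:
    """Auto-approve allowlisted actions; everything else waits for a human."""
    if not is_allowed(action):
        return f"needs human approval: {action}"
    return f"auto-approved: {action}"
```

Glob matching on raw strings is deliberately naive here. A real harness would need to parse and normalize commands and paths before matching them.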

But let’s be honest - expecting people to stick to those restrictions is unrealistic, and the majority of people give the systems full access to their computers. You need to let the agents run at inference speed; getting the human in the way just slows things down unacceptably. But this invites risk - running an agent on your computer’s primary filesystem has far too large a blast radius. A bad agent with full permissions could delete your entire home directory, locking you out of your computer. Running agents on our laptops without a sandbox is a bad status quo.

The concept of agents using my identity also doesn’t make sense when agents start running in the cloud - if I have 5 agents in the cloud, they can’t all be using Chris’ identity. It just doesn’t make sense. I’m not going to grant cloud VMs my GitHub credentials, AWS credentials, etc.

The agent needs its own identity, and with it a permission set, separate from that of the human running it.

How do we structure agent identity and permissions?

There are a few options for agent identity: 1) A global identity used by all agent instances 2) Per archetype shared identity 3) Each agent (a cohesive context window) having a unique identity linked to a policy

Of these, option 1 seems the most obvious, but it is also the least secure. A single agent identity means a global set of permissions, which means the largest blast radius if things go wrong.

Option 2 seems ok - “operator” agents, for example, all share the same permissions. That makes sense. However, you don’t want them to all have the same identity - if an operator went wrong, you’d want to know which agent’s context window created the problem.

Option 3 seems the most powerful, and the one to choose. Each agent gets an identity granted to it, a UUID. Agents can then be attached to a permissions policy, say an operator policy. But the agent has its own unique identity that it uses to take action.
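Option 3 can be sketched roughly as follows. The `Policy` and `AgentIdentity` classes, the action names, and the `audit_record` helper are hypothetical, invented for this example. The point is that many agents share one policy while each keeps its own UUID.

```python
import uuid
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Policy:
    """A named permission set shared by an archetype (hypothetical)."""
    name: str
    allowed_actions: frozenset[str]


@dataclass(frozen=True)
class AgentIdentity:
    """One identity per agent context window, attached to a shared policy."""
    policy: Policy
    agent_id: uuid.UUID = field(default_factory=uuid.uuid4)

    def can(self, action: str) -> bool:
        return action in self.policy.allowed_actions


def audit_record(agent: AgentIdentity, action: str) -> dict:
    """Record who attempted what, so a failure traces back to one agent."""
    return {
        "agent_id": str(agent.agent_id),
        "policy": agent.policy.name,
        "action": action,
        "allowed": agent.can(action),
    }


# Two operator agents: same permissions, distinct identities.
operator_policy = Policy("operator", frozenset({"read_logs", "restart_service"}))
agent_a = AgentIdentity(operator_policy)
agent_b = AgentIdentity(operator_policy)
```

Because every audit record carries the agent’s own ID, a bad action can be traced to a single context window even though the permissions are shared.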

In this world, when a human interacts with the agent they either need to: 1) Know its unique identity 2) Interact with a global “router” agent that hides the fact that multiple identities are behind the scenes
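The second option, a router in front of many identities, might look something like this minimal sketch. The `Router` class and its topic-based lookup are assumptions for illustration. A real router would likely be an agent itself and decide where to send a request from its content.

```python
import uuid


class Router:
    """Single front door that hides many agent identities behind it."""

    def __init__(self) -> None:
        # Hypothetical mapping: topic -> identity of the agent that handles it.
        self._agents: dict[str, uuid.UUID] = {}

    def register(self, topic: str) -> uuid.UUID:
        """Create a new agent identity for a topic and return it."""
        agent_id = uuid.uuid4()
        self._agents[topic] = agent_id
        return agent_id

    def route(self, topic: str) -> uuid.UUID:
        """Return the identity that will handle the request."""
        if topic not in self._agents:
            raise KeyError(f"no agent registered for topic: {topic}")
        return self._agents[topic]
```

The human only ever talks to the router, but the router still knows which identity took each request, so nothing is lost for auditing.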

Both approaches will be good in different scenarios. Humans will need simplified interfaces as the agent economies become more complex.

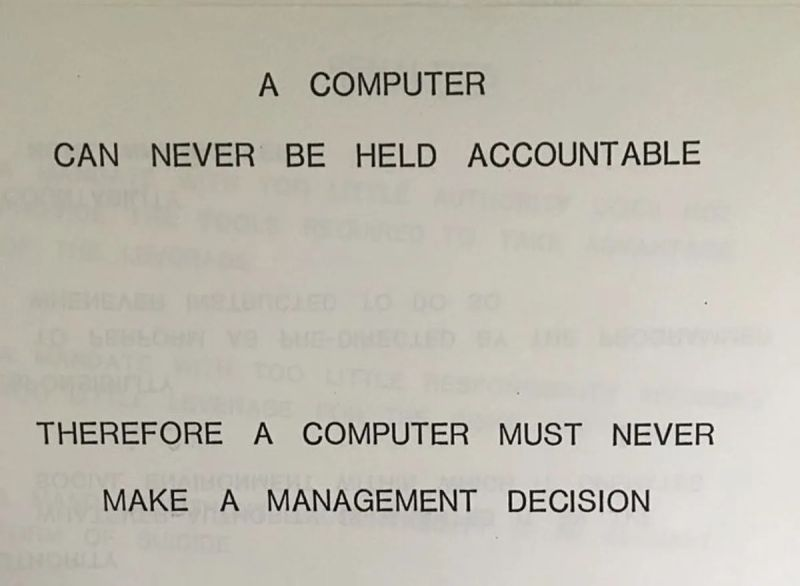

How do we structure responsibility and blame?

Rogue LLM agents will take down billion-dollar systems by accident, just like humans do. This will happen, and it’s ok.

However, there will need to be accountability - when an agent makes a mistake, who is accountable for that mistake? This is subtly different from blame - accountability is remediating, following up, making sure it can’t happen again.

I think this process can look one of two ways: 1) Hierarchical ownership - similar to existing corporate structures, I am the leader and the buck stops with me 2) Group ownership - teams themselves “own” their agents and are responsible for rotating responsibility and accountability
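The two ownership models can be sketched side by side. The class names, the weekly rotation period, and the example names in the usage lines are all assumptions for illustration. The shared `accountable_on` method answers the same question in both models: who follows up on an incident on a given day?

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class SingleOwner:
    """Hierarchical ownership: one named human is always accountable."""
    owner: str

    def accountable_on(self, day: date) -> str:
        return self.owner


@dataclass(frozen=True)
class TeamRotation:
    """Group ownership: accountability rotates weekly through the team."""
    members: tuple[str, ...]
    start: date  # first day of the first rotation week

    def accountable_on(self, day: date) -> str:
        weeks_elapsed = (day - self.start).days // 7
        return self.members[weeks_elapsed % len(self.members)]


# Hypothetical usage: a three-person rotation starting on a Monday.
rotation = TeamRotation(("ana", "bo", "cy"), start=date(2026, 1, 5))
on_call = rotation.accountable_on(date(2026, 1, 12))  # second week of the rotation
```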

Both approaches will likely be used. Group ownership will be most attractive - this allows humans to share the load, similar to existing on-call practices. But singular ownership will also be used in small teams, or for time-constrained problems. It will be increasingly hard for humans to have the correct mental model of the system their agents are working on. It will be even harder for humans to share that mental model with each other. If software agents can make 6 months of progress in a week, what hope do we humans have?

How we load mental models of the system, and what mental models we try to maintain over time, and how, will be increasingly important skills.

We need better tools

Current on-device CLIs are an incredible but limited step toward where agent orchestration tools need to go.

We need tools that:

- Allow agents to run in the cloud, 24/7

- Run agents with unique identities and a restricted set of permissions

- Give teams of humans a way to collaborate on the agent herding, rather than agents living on just one person’s machine

So much of AI <-> Human collaboration is locked on individual computers, and workflows are hidden. It’s time for this to bloom.